Improving My AI Side Project

Why I Built An AI App

Almost a year and a half ago, I launched Generate Coloring Sheets, a web application. The idea behind it was to provide my children with an endless supply of coloring sheets. My son in particular was always asking for masks to color, and there are (surprisingly) only so many you can find out in the wilds of the internet.

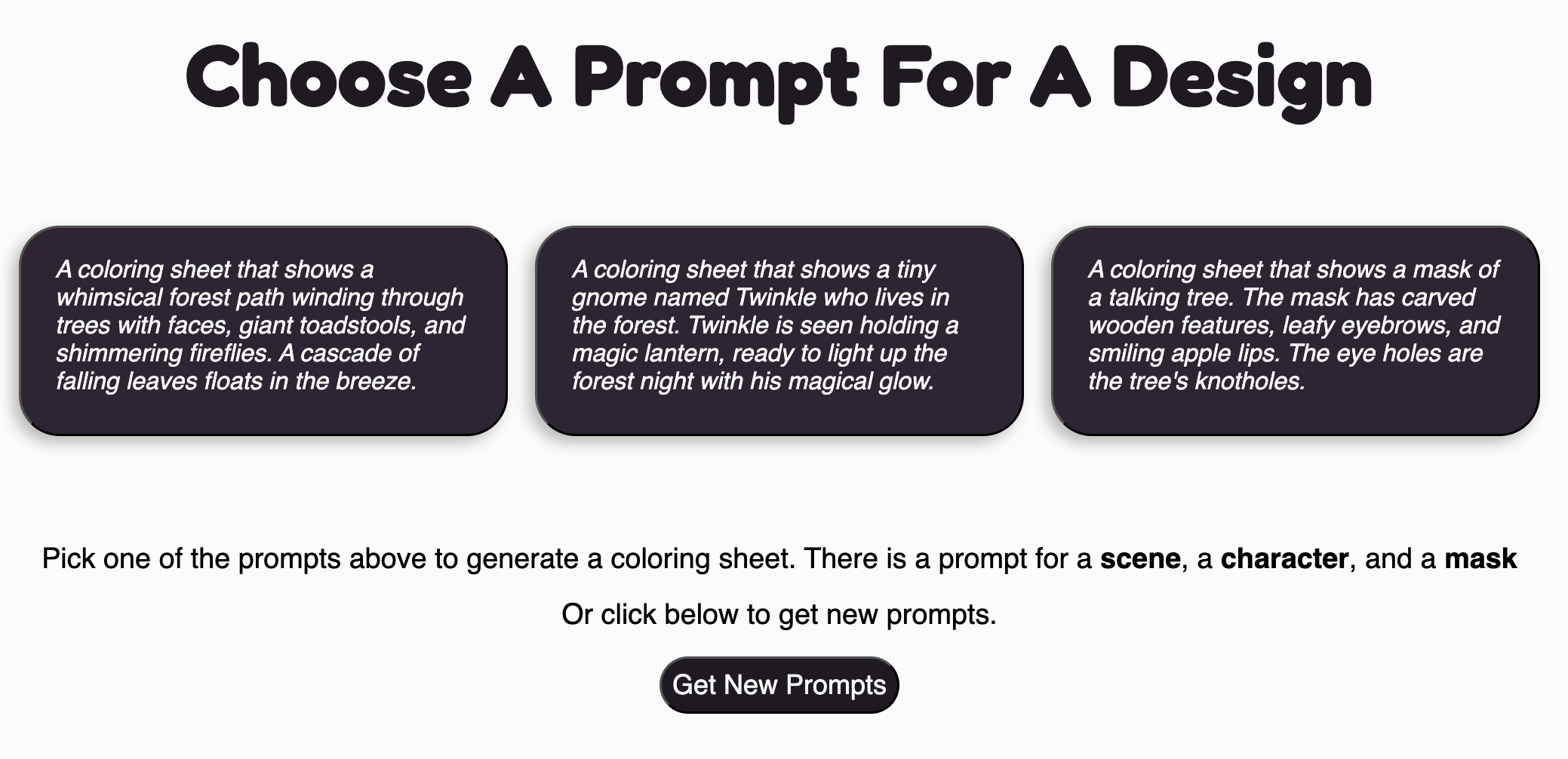

The first iteration of the app worked reasonably well. It presented you with three prompts for a coloring sheet: one for a scene, one for a character, and one for a mask. You could choose to generate a coloring sheet from one of these prompts, or choose to get another set of prompts.

I decided early on to use set prompts instead of user input, both to rid myself of concerns about NSFW input or misinterpretation and, hopefully, to get some ideas that my kids and I would never have thought of. This worked for about the first day.

Every time we used the app, my children and I would have to cycle through the same versions of prompts we had seen before. Despite using OpenAI’s chat history feature with instructions to not repeat things, this would still happen.

So a week ago, with some spare time, I set out to address this problem.

Fixing Repetitive Prompts

To get better prompts, I went back and looked at my original API request to get the three prompts. To me, it clearly stated what I wanted:

Generate three prompts for children’s coloring sheets, intended to be used with the OpenAI IMAGE API (do not generate the sheets). Each prompt should be two sentences long and begin with ‘A coloring sheet that shows’. Each sentence should short, simple and concise. One for a scene, one for a character, and one for a mask. Format your response as a JSON object with three keys: scene, character, and mask. Each key should have a string value.

Follow-up prompts would include instructions to not repeat previous responses:

Generate three more prompts for children’s coloring sheets. Do not repeat any prompts from the previous responses. Each prompt should be …

However, after reading a bit more about “prompt engineering” and how to write better prompts, it became clear I had to give the AI better, more explicit instructions. With some help from ChatGPT, I changed my prompts to the following:

Generate three original prompts for children’s coloring sheets.

- Each prompt should be two short sentences, starting with “A coloring sheet that shows”.

- Each should be simple and age-appropriate for children ages 4-8.

- One prompt should depict a scene, one a character, and one a mask.

- Format your response as a JSON object with three keys: “scene”, “character”, and “mask”. Each key should have a string value.

The follow-up prompt:

Generate three new and distinctly different prompts for children’s coloring sheets.

- Do not repeat any themes, concepts, or wordings from previous prompts.

- Each prompt should be two short sentences, starting with “A coloring sheet that shows”.

- Each should be simple and age-appropriate for children ages 4-8.

- One prompt should depict a scene, one a character, and one a mask.

- Be imaginative! Avoid common topics like cats, dogs, unicorns, or rainbows if they’ve been used recently.

- Format your response as a JSON object with three keys: “scene”, “character”, and “mask”. Each key should have a string value.

After updating the prompts to this, I saw better results. The prompts were more varied. However, they still had a sense of “sameness”, in that the same ideas or themes were always present, even if the prompts themselves were more diverse. It was always unicorns, snowmen, and pirates. Numerous pirates for some reason.

In a forum, I had come across the concept of “seeding” the prompt: inserting a random factor to help influence the results. The idea seemed promising.

For my purposes, the random seed would be a theme. Undersea adventures, Halloween, Baby Animals, Magical Castles, etc. I generated a list of 50 themes, and with each prompt, a random theme would be inserted.

This resulted in even better prompts. Now I was cooking.
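Here is a minimal sketch of the seeding idea, not the app's actual code. The short theme list, the wording of the theme line, and the helper names `pickTheme` and `buildSeededPrompt` are all assumptions made for illustration.

```javascript
// Hypothetical theme list; the real app draws from about 50 themes.
const THEMES = ["Undersea Adventures", "Halloween", "Baby Animals", "Magical Castles"];

// Pick one theme uniformly at random. `random` is injectable so the
// choice can be made deterministic when testing.
function pickTheme(themes, random = Math.random) {
  const index = Math.floor(random() * themes.length);
  return themes[index];
}

// Build the prompt text with the chosen theme inserted as an extra instruction.
function buildSeededPrompt(theme) {
  return [
    "Generate three original prompts for children's coloring sheets.",
    `- All three prompts should fit the theme: "${theme}".`,
    "- One prompt should depict a scene, one a character, and one a mask.",
  ].join("\n");
}

const theme = pickTheme(THEMES);
console.log(buildSeededPrompt(theme));
```

Because the theme is chosen in code rather than by the model, the variety no longer depends on the model remembering what it suggested before.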

I also added some properties to the API request that would, according to the documentation, increase the creativity and randomness of the responses: temperature and top_p.

Temperature affects both creativity and randomness, while top_p controls randomness via probability mass sampling. Temperature adjusts how confidently the model picks its next word: lower values make the output more predictable and deterministic, and higher values make it more random and creative. top_p, by contrast, limits the model’s choices to the most likely words whose combined probability reaches a threshold (in my case 95%), and picks randomly from that set.

It should be noted that OpenAI recommends adjusting only one of these rather than both, since changing both can lead to very unpredictable results. However, that is what I was going for, so I’ll leave it like this for now.
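To make the two settings more concrete, here is a toy sketch of temperature scaling followed by top_p filtering over four made-up word scores. It illustrates the general technique only. The scores, the word labels, and the function names are assumptions, and this is not a description of how OpenAI implements sampling internally.

```javascript
// Turn raw scores (logits) into probabilities, after dividing by temperature.
// Lower temperature sharpens the distribution; higher temperature flattens it.
function softmaxWithTemperature(logits, temperature) {
  const scaled = logits.map((x) => x / temperature);
  const max = Math.max(...scaled); // subtract the max for numerical stability
  const exps = scaled.map((x) => Math.exp(x - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// Keep the most likely entries until their combined probability reaches topP,
// then renormalize so the kept probabilities sum to 1.
function topPFilter(probs, topP) {
  const ranked = probs
    .map((p, index) => ({ p, index }))
    .sort((a, b) => b.p - a.p);
  const kept = [];
  let cumulative = 0;
  for (const item of ranked) {
    kept.push(item);
    cumulative += item.p;
    if (cumulative >= topP) break;
  }
  return kept.map(({ p, index }) => ({ index, p: p / cumulative }));
}

// Made-up scores for four candidate words.
const words = ["pirate", "unicorn", "snowman", "octopus"];
const logits = [2.0, 1.0, 0.5, -1.0];

for (const temperature of [0.5, 1.0, 1.5]) {
  const probs = softmaxWithTemperature(logits, temperature);
  const kept = topPFilter(probs, 0.95).map((k) => words[k.index]);
  console.log(`temperature ${temperature}: candidates = ${kept.join(", ")}`);
}
```

In this toy setup, raising the temperature spreads probability away from the top word, which also lets more words fit under the 95% cutoff before it is reached.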

Improved prompts above

A forest gnome

Intermittent Failures

One issue I was still having was that the prompt request would intermittently fail, and it wasn’t immediately clear where the break was happening.

The entire lifecycle of a prompt request was this:

1. User selects a prompt.

2. React app makes a request to /api/prompt.

3. Request hits an Ubuntu web server, which directs requests to a Node Express server.

4. The Express server instance makes a request to the OpenAI API.

5. The Express server handles the success/failure of the OpenAI API request, and sends success/failure back up to the client.

The problem was happening somewhere in steps 4 and 5, with some malformed JSON.

First, I dug into the request to the OpenAI API. After logging the request body and tailing some server logs, I was pretty sure it wasn’t happening there. The JSON looked fine.

So I moved on to how the API response was handled. After tailing the server logs and taking a look at the response from the OpenAI API, I located the issue. Remember how in my prompts, I have this line: Format your response as a JSON object with three keys: “scene”, “character”, and “mask”.

Well, the AI followed these instructions about four out of five times. On that fifth time, it would send the response back as a numbered list, or just paragraphs. I guess it hated consistency.
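A failure like this is easier to spot when the server checks the response before passing it on. The following is a hedged sketch of such a check, not the fix the app ended up using. `parsePromptResponse` and `REQUIRED_KEYS` are hypothetical names.

```javascript
const REQUIRED_KEYS = ["scene", "character", "mask"];

// Returns the parsed prompts, or null when the text is not the expected JSON.
function parsePromptResponse(text) {
  let data;
  try {
    data = JSON.parse(text);
  } catch (err) {
    return null; // e.g. a numbered list or plain paragraphs
  }
  if (data === null || typeof data !== "object" || Array.isArray(data)) {
    return null; // valid JSON, but not an object
  }
  // Every required key must be present and hold a non-empty string.
  const valid = REQUIRED_KEYS.every(
    (key) => typeof data[key] === "string" && data[key].trim() !== ""
  );
  return valid
    ? { scene: data.scene, character: data.character, mask: data.mask }
    : null;
}

// A well-formed response parses; a numbered list does not.
console.log(parsePromptResponse('{"scene":"a","character":"b","mask":"c"}') !== null);
console.log(parsePromptResponse("1. A scene\n2. A character\n3. A mask") !== null);
```

Returning null instead of throwing lets the caller decide whether to retry the request or send a clear error back to the client.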

Not being able to get the AI to be consistent with JSON was a big problem. After digging into the API docs a little deeper, thankfully I found a solution. The API had a “functions” feature where you could define “function schemas” and tell the API which of these schemas to use when it formatted its response.

So, I defined my functions:

    // Function schema describing the JSON shape the response should follow.
    const functions = [
      {
        name: "generate_coloring_prompts",
        description: "Generate coloring sheet prompts for children ages 4–8.",
        parameters: {
          type: "object",
          properties: {
            scene: {
              type: "string",
              description: "A prompt for a coloring sheet that shows a scene.",
            },
            character: {
              type: "string",
              description: "A prompt for a coloring sheet that shows a character.",
            },
            mask: {
              type: "string",
              description: "A prompt for a coloring sheet that shows a mask.",
            },
          },
          // All three prompts must be present in the response.
          required: ["scene", "character", "mask"],
        },
      },
    ];

This ensured that the response was always a JSON object that followed this schema.
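For readers who want to see how a schema like this plugs into a request, here is a minimal sketch using the Chat Completions `functions` and `function_call` parameters. It is not the app's actual code. The helper names, the shortened schema, and the mocked response are assumptions, and the model name is passed in because the post does not say which model the app uses.

```javascript
// Shortened, hypothetical copy of the schema so this block runs on its own.
const promptFunctions = [
  {
    name: "generate_coloring_prompts",
    description: "Generate coloring sheet prompts for children ages 4-8.",
    parameters: {
      type: "object",
      properties: {
        scene: { type: "string" },
        character: { type: "string" },
        mask: { type: "string" },
      },
      required: ["scene", "character", "mask"],
    },
  },
];

// Build a Chat Completions request body that names the function to use.
function buildPromptRequest(model, userPrompt, functions) {
  return {
    model,
    messages: [{ role: "user", content: userPrompt }],
    functions,
    // Ask for this specific function rather than letting the model decide.
    function_call: { name: functions[0].name },
  };
}

// Pull the structured arguments out of a response. The API returns them as
// a JSON string, so they still need to be parsed.
function extractPrompts(response) {
  const call = response?.choices?.[0]?.message?.function_call;
  if (!call || typeof call.arguments !== "string") {
    throw new Error("Response did not contain a function call");
  }
  return JSON.parse(call.arguments);
}

// Mocked response in the documented shape, standing in for a real API call.
const mockResponse = {
  choices: [
    {
      message: {
        role: "assistant",
        content: null,
        function_call: {
          name: "generate_coloring_prompts",
          arguments: JSON.stringify({
            scene: "A coloring sheet that shows an undersea kingdom.",
            character: "A coloring sheet that shows an octopus astronaut.",
            mask: "A coloring sheet that shows a time traveling turtle mask.",
          }),
        },
      },
    },
  ],
};

console.log(extractPrompts(mockResponse).character);
```

Since the arguments string is still generated by the model, wrapping `extractPrompts` in a try/catch on the server is a reasonable extra safeguard.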

A Happy Ending

After these changes, Generate Coloring Sheets was a lot more fun for my children to use. Instead of the same boring old superhero and pirate masks, they were getting time-traveling turtles, talking superhero footballs, undersea kingdoms, and octopus astronauts. They are happily coloring away, and I’m happily watching (and enjoying some spare time).

Curious what it generates now? Try it yourself—your kids might surprise you with their favorites.